ML Resources For Offsec

My first post on Offensive Machine Learning was in 2018, when @monoxgas and I found we could detect a sandbox using hand-crafted features with a Decision Tree. Because a model is just an array of weights + math, we could hide the network in a macro or table, and it. was. awesome. After that little success, machine learning was all I could think about or wanted to do. A year later, we’d go on to present Proofpudding and apply ML to other small offensive tasks.

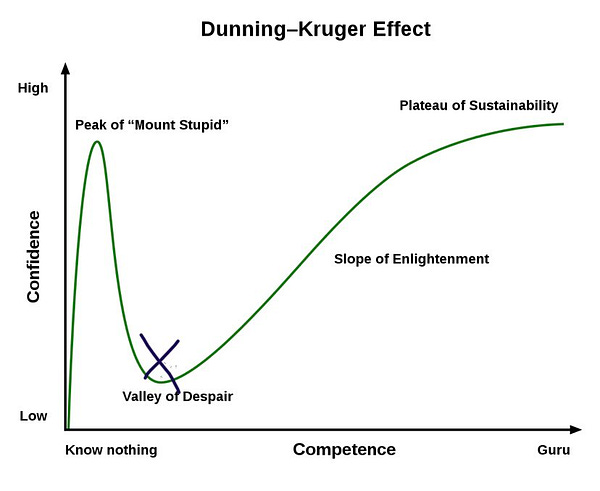
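To make the "array of weights + math" point concrete, here is a minimal sketch of a tiny network evaluated with nothing but lists and arithmetic. It is not the model from the original post: the weights, the layer sizes, and the two generic input features are all made-up assumptions for illustration. The point is only that inference needs no ML library, so the numbers could live anywhere plain data can be stored.

```python
import math

# Hypothetical weights for illustration only; not taken from any real project.
W1 = [[0.5, -0.25], [-0.75, 0.5]]  # hidden layer: 2 inputs -> 2 units
B1 = [0.0, 0.1]
W2 = [1.0, -1.0]                   # output layer: 2 units -> 1 score
B2 = -0.2


def relu(x):
    """Rectified linear unit: pass positives through, clamp negatives to 0."""
    return x if x > 0 else 0.0


def sigmoid(x):
    """Squash a raw score into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def predict(features):
    """Forward pass: weighted sums, a nonlinearity, then a final weighted sum."""
    hidden = [
        relu(sum(w * f for w, f in zip(row, features)) + b)
        for row, b in zip(W1, B1)
    ]
    score = sum(w * h for w, h in zip(W2, hidden)) + B2
    return sigmoid(score)


if __name__ == "__main__":
    # Two made-up feature vectors; the output is a probability-like score.
    print(predict([2.0, 0.0]))
    print(predict([0.0, 0.0]))
```

Everything the model "knows" sits in `W1`, `B1`, `W2`, and `B2`; the rest is a few lines of arithmetic that port easily to other languages.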

These early projects led me to find all kinds of cool prior work: SNAP_R, Markov Obfuscate, and Timing Attacks, just to name a few. However, despite all the fun I was having, following tutorials to solve a task isn’t a long-term solution. So, I made the effort to learn some fundamentals, and I’ve at least learned enough to make jokes about how much I still don’t know.

The learning curve is reasonably steep, but communities like the AI Village are beginner friendly. Below are the resources I started with and continue to reference. They are good top-down references; some are deeply technical, but overall they do a good job of keeping the required intuition accessible.

*Similar (pick one)

Human Learning

Make Your Own Neural Network by Tariq Rashid

Applied Text Analysis with Python by Benjamin Bengfort, Rebecca Bilbro, and Tony Ojeda

Inside Deep Learning* by Edward Raff

Python Machine Learning* by Sebastian Raschka and Vahid Mirjalili

Natural Language Processing With Transformers by Leandro von Werra, Lewis Tunstall, and Thomas Wolf

Designing Machine Learning Systems by Chip Huyen

Math

Dive Into Algorithms by Bradford Tuckfield

Linear Algebra for Everyone* by Gilbert Strang

Introduction to Linear Algebra* by Gilbert Strang

Grokking Algorithms by Aditya Y. Bhargava

Optimum Seeking Methods by Douglas C. Wilde

TBD

Adversarial Machine Learning

Stealing Machine Learning Models via Prediction APIs by Florian Tramèr, Fan Zhang, Ari Juels, Michael Reiter, and Thomas Ristenpart

HopSkipJumpAttack: A Query-Efficient Decision-Based Attack by Jianbo Chen, Michael I. Jordan, Martin J. Wainwright

Quantifying Memorization Across Neural Language Models by Nicholas Carlini, Daphne Ippolito, Matthew Jagielski, Katherine Lee, Florian Tramèr, Chiyuan Zhang

Model Inversion Attacks that Exploit Confidence Information and Basic Countermeasures by Matt Fredrikson, Somesh Jha, and Thomas Ristenpart

Black-box Generation of Adversarial Text Sequences to Evade Deep Learning Classifiers by Ji Gao, Jack Lanchantin, Mary Lou Soffa, Yanjun Qi

Seq2Sick: Evaluating the Robustness of Sequence-to-Sequence Models with Adversarial Examples by Minhao Cheng, Jinfeng Yi, Pin-Yu Chen, Huan Zhang, Cho-Jui Hsieh